On the cloud, cold starts, where an app or service is off and must be started from scratch the moment a request for it arrives, are a real pain: we humans are not particularly patient, and having to wait while a service is being brought up makes us complain or, even worse, switch to a competing service or website.

Cold starts are a function of all the components that stand between you, the user, and the actual service; these include, but are not limited to, load balancers, controllers, virtual machine monitors, and the boot times of general-purpose operating systems like Linux.

But even assuming that you could have that entire chain act in milliseconds (for a write-up on how this is actually possible, see here), you’d still get hit by the application’s start time. For nimble, performance-oriented applications such as the NGINX web server, that start time is a few milliseconds; in this case, count your lucky stars, as you’re not running what we call a “heavy” app.

In many other cases, however, applications (and by applications we also mean the frameworks and languages they depend on to run) can take hundreds of milliseconds, seconds, or sometimes even minutes to initialize. For example:

- Heavyweight languages like Java, whose runtimes include just-in-time compilers that take a while to initialize;

- Heavyweight runtimes and frameworks like Node.js or Ruby on Rails that are slow to initialize;

- Applications that themselves take a while to initialize (e.g., an app that needs to pre-populate its data from a remote database).

Not to mention that there’s a linear relationship between how much memory an app needs to run and how long it takes to start the virtual machine it runs on (and yes, on public clouds, your app is almost always running on a virtual machine).

Enter Snapshotting, or How to Get out of the Heavy App Bog

To address the issue, Unikraft Cloud leverages a standard virtualization mechanism called snapshotting. Snapshotting is performed by the virtual machine monitor (VMM), which you can think of as an agent that manages all virtual machines on a host (examples of VMMs are QEMU or Firecracker on Linux/KVM, xl/libxl on Xen, etc.). When taking a snapshot, the VMM (transparently) pauses the running VM (and with it the app), saves a snapshot of the VM’s entire memory, and (transparently) unpauses the VM.
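To make the pause/snapshot/unpause sequence concrete, here is a minimal sketch assuming Firecracker as the VMM; the post doesn’t say which VMM Unikraft Cloud uses internally. The sketch only builds the requests for Firecracker’s `/vm` and `/snapshot/create` API endpoints rather than sending them over its Unix socket, and the `snapshot_requests` helper and file paths are hypothetical.

```python
import json
from typing import List, Tuple

# (HTTP method, API path, JSON body) as accepted by Firecracker's REST API,
# which listens on a Unix domain socket. Sending is left out of this sketch.
Request = Tuple[str, str, dict]


def snapshot_requests(snapshot_path: str, mem_path: str, diff: bool = False) -> List[Request]:
    """Build the pause -> snapshot -> resume sequence for one Firecracker VM."""
    return [
        # 1. Pause the vCPUs so guest memory stops changing.
        ("PATCH", "/vm", {"state": "Paused"}),
        # 2. Save the VM state (vCPU/device state) to snapshot_path and guest
        #    memory to mem_file_path. "Diff" only writes pages dirtied since the
        #    last snapshot and needs dirty-page tracking enabled on the VM.
        ("PUT", "/snapshot/create", {
            "snapshot_type": "Diff" if diff else "Full",
            "snapshot_path": snapshot_path,
            "mem_file_path": mem_path,
        }),
        # 3. Resume the guest; the app itself is unaware of the pause.
        ("PATCH", "/vm", {"state": "Resumed"}),
    ]


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    for method, path, body in snapshot_requests("/srv/snap/vmstate", "/srv/snap/mem"):
        print(method, path, json.dumps(body))
```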

As you’ve probably guessed, snapshotting lets us:

- Deploy the app and let it fully initialize (which may take seconds or minutes);

- Detect when it’s ready (e.g., by checking whether its listening port accepts connections);

- Pause the instance, take a snapshot, and then set the instance state to standby (scale-to-zero).
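The readiness check in the second step could be as simple as polling the app’s port until a TCP connection succeeds. A minimal sketch, assuming the app listens on a known TCP port; `wait_until_listening` and its defaults are hypothetical and not Unikraft Cloud’s actual probe.

```python
import socket
import time


def wait_until_listening(host: str, port: int, timeout: float = 60.0, interval: float = 0.05) -> bool:
    """Poll host:port until a TCP connection succeeds.

    Returns True as soon as something accepts the connection, or False if
    `timeout` seconds elapse first.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means the app has bound its port and is listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused or timed out: the app is most likely still initializing.
            time.sleep(interval)
    return False
```

Once this returns True, the controller would pause the instance, snapshot it, and mark it as standby. A port being open is a heuristic; an app could bind its port before it has finished warming up, in which case an application-level health check would be the safer signal.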

With this in place, when a request comes in, instead of going through the entire initialization process, we can wake the VM and app from the initialized snapshot. The process is illustrated in the following diagram:
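The wake-on-request path can be sketched as a tiny controller. Everything here is hypothetical: `Instance`, `Controller`, and the `restore_fn` callback (a stand-in for the VMM’s "load snapshot and resume" call) are illustrative names, not part of any Unikraft Cloud API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class State(Enum):
    RUNNING = "running"
    STANDBY = "standby"  # scaled to zero: no CPU or memory in use


@dataclass
class Instance:
    """Hypothetical record a scale-to-zero controller keeps per instance."""
    name: str
    snapshot: str  # snapshot taken once the app finished initializing
    state: State = State.STANDBY
    wakeups: int = 0


class Controller:
    def __init__(self, restore_fn: Callable[[str], None]):
        # Stand-in for the VMM call that loads a snapshot and resumes the VM.
        self.restore_fn = restore_fn

    def handle_request(self, inst: Instance, request: str) -> str:
        if inst.state is State.STANDBY:
            # Cold start: restore the already-initialized VM from its snapshot
            # instead of booting it and re-running the app's initialization.
            self.restore_fn(inst.snapshot)
            inst.state = State.RUNNING
            inst.wakeups += 1
        # Warm path (or just woken up): forward the request to the running app.
        return f"{inst.name} handled {request}"

    def scale_to_zero(self, inst: Instance) -> None:
        # An idle instance goes back to standby; the next request wakes it
        # again from the same snapshot.
        inst.state = State.STANDBY
```

In this sketch only the first request after standby pays the restore cost; requests that arrive while the instance is running take the warm path.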

The end result is cold starts, scale-to-zero and autoscaling in tens of milliseconds instead of seconds or minutes (assuming the entire cloud stack is optimized, as previously mentioned). Even serverless offerings such as AWS Lambda struggle to provide fast cold starts for heavy apps:

(And yes, Lambda has something called SnapStart, but it only supports specific runtimes, originally just Java; on Unikraft Cloud, the mechanism is agnostic to the app or service being deployed.)

One added benefit of snapshots is that they also allow us to optionally provide stateful scale-to-zero: the state of your app is kept across the zero-to-one and one-to-zero wake-up/standby cycles. If this isn’t the desired behavior, you can still get the fast-start benefit of snapshotting without stateful semantics by always waking up from the initial snapshot.
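The difference between stateful and stateless scale-to-zero boils down to which snapshot an instance wakes from. A minimal sketch; `SnapshotChain` and its fields are hypothetical bookkeeping, and in practice the later snapshots would be incremental rather than full copies.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SnapshotChain:
    """Hypothetical per-instance snapshot bookkeeping."""
    initial: str  # taken right after the app finished initializing
    later: List[str] = field(default_factory=list)  # one per one-to-zero transition
    stateful: bool = True

    def on_standby(self, new_snapshot: str) -> None:
        # Only stateful instances need to keep what happened while running.
        if self.stateful:
            self.later.append(new_snapshot)

    def wake_from(self) -> str:
        # Stateful: resume where the app left off.
        # Stateless: always restart from the pristine, freshly initialized state.
        if self.stateful and self.later:
            return self.later[-1]
        return self.initial
```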

Optimizing Snapshotting

So far I’ve painted a somewhat simplified picture of how snapshotting is implemented on our platform; clearly, taking big snapshots all the time wouldn’t scale, so we have a number of mechanisms in place to make it scale. Covering these would probably require a separate write-up, but briefly, we make use of incremental snapshots, whereby only the initial snapshot requires a full memory snapshot, while subsequent ones only save the difference between the current state and the previous one. We also have algorithms in place to ensure that we do not saturate the link between main memory (where running apps live) and the NVMe drives we save snapshots to and restore them from.
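Incremental snapshots can be illustrated at page granularity. This is a toy model, not Unikraft Cloud’s implementation: guest memory is a `bytes` object of fixed size, pages are assumed to be 4 KiB, and changed pages are found by comparing contents, whereas a real VMM would rely on dirty-page tracking (e.g., KVM’s dirty log).

```python
from typing import Dict, List

PAGE_SIZE = 4096  # bytes; assumed page size for this sketch


def split_pages(memory: bytes) -> List[bytes]:
    return [memory[i:i + PAGE_SIZE] for i in range(0, len(memory), PAGE_SIZE)]


def full_snapshot(memory: bytes) -> Dict[int, bytes]:
    """Initial snapshot: every page is saved, keyed by page index."""
    return dict(enumerate(split_pages(memory)))


def diff_snapshot(previous: bytes, current: bytes) -> Dict[int, bytes]:
    """Incremental snapshot: only pages that changed since `previous` are saved."""
    assert len(previous) == len(current), "guest memory size is fixed"
    prev_pages = split_pages(previous)
    return {i: page for i, page in enumerate(split_pages(current)) if page != prev_pages[i]}


def restore(base: Dict[int, bytes], diffs: List[Dict[int, bytes]]) -> bytes:
    """Rebuild memory by applying each diff, oldest first, on top of the full snapshot."""
    pages = dict(base)
    for d in diffs:
        pages.update(d)
    return b"".join(pages[i] for i in sorted(pages))
```

If only a handful of pages change between standby cycles, each diff stays small relative to the full snapshot, which is what keeps the write traffic to storage manageable.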

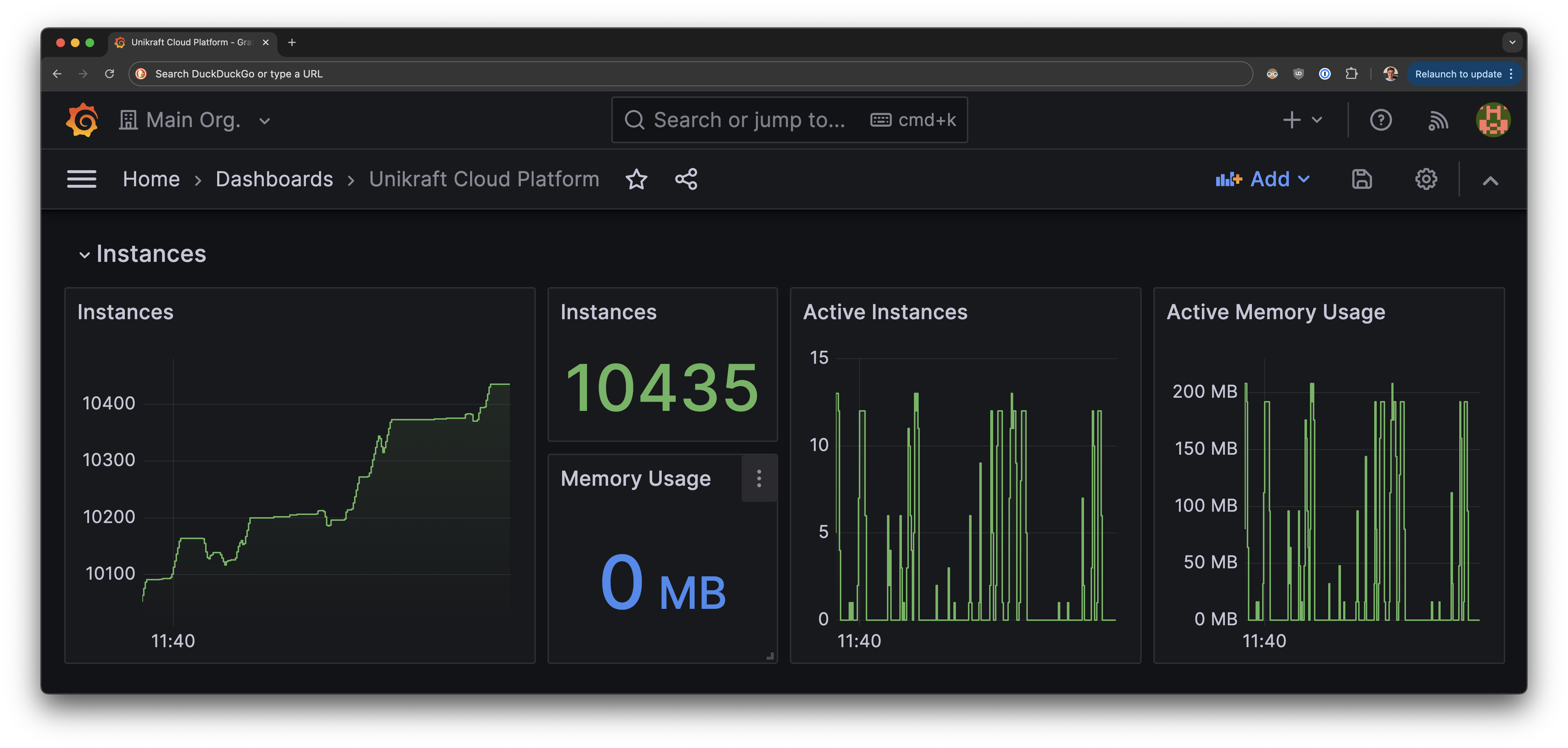

Putting it all together results in screenshots such as these:

To explain what’s going on here: this is a single server with, at the top, 10+ Next.js (heavy app) instances on standby, i.e., scaled to zero, and so consuming neither CPU nor memory (hence Grafana showing 0 MB). The bottom half of the screenshot shows that the most recently (cold) started instance took about 12 milliseconds to wake up from its snapshot. We used Next.js as the heavy app, but any other would have done; we regularly run similar scale tests with apps based on Spring Boot, headless browsers, and many others. As previously mentioned, the mechanism is agnostic to the actual app being deployed.